Is There an Offline ChatGPT? 7 Offline Tools For Your Needs

Using a sophisticated language model like ChatGPT without an internet connection is appealing to many users, especially privacy-conscious individuals or those with limited access to the web.

Given the widespread reliance on the internet for modern applications, it’s natural to ask: Is there an offline ChatGPT? If not, what alternatives can be used without internet connectivity?

Let’s see whether ChatGPT or similar tools can be used offline, discuss the challenges of creating offline models, and present alternatives offering offline functionality.

Is There an Offline Version of ChatGPT?

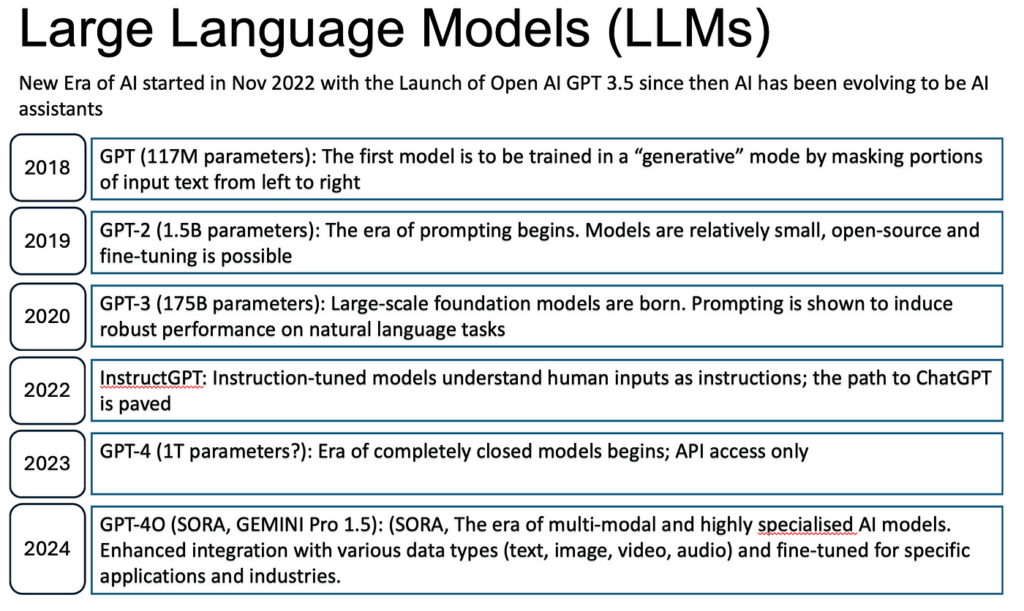

As of now, ChatGPT itself does not offer a fully offline version. The models behind it, developed by OpenAI, require significant computational power and run on cloud infrastructure that processes inputs and generates responses in real time.

These models are hosted on powerful servers that users reach over the internet, and OpenAI does not distribute their weights for local use. That makes it difficult to provide a portable, offline version that operates at the same level of sophistication.

Although there is no direct offline version of ChatGPT, some tools offer similar functionality and can be used without an internet connection.

Offline Alternatives to ChatGPT

While ChatGPT requires internet access, several offline language models and tools offer similar features, particularly for text generation and natural language processing.

These alternatives are generally smaller in size, capable of running on local machines, and offer varying levels of functionality depending on the user’s needs.

Below are some of the best offline alternatives for users looking to engage with a model without needing an internet connection:

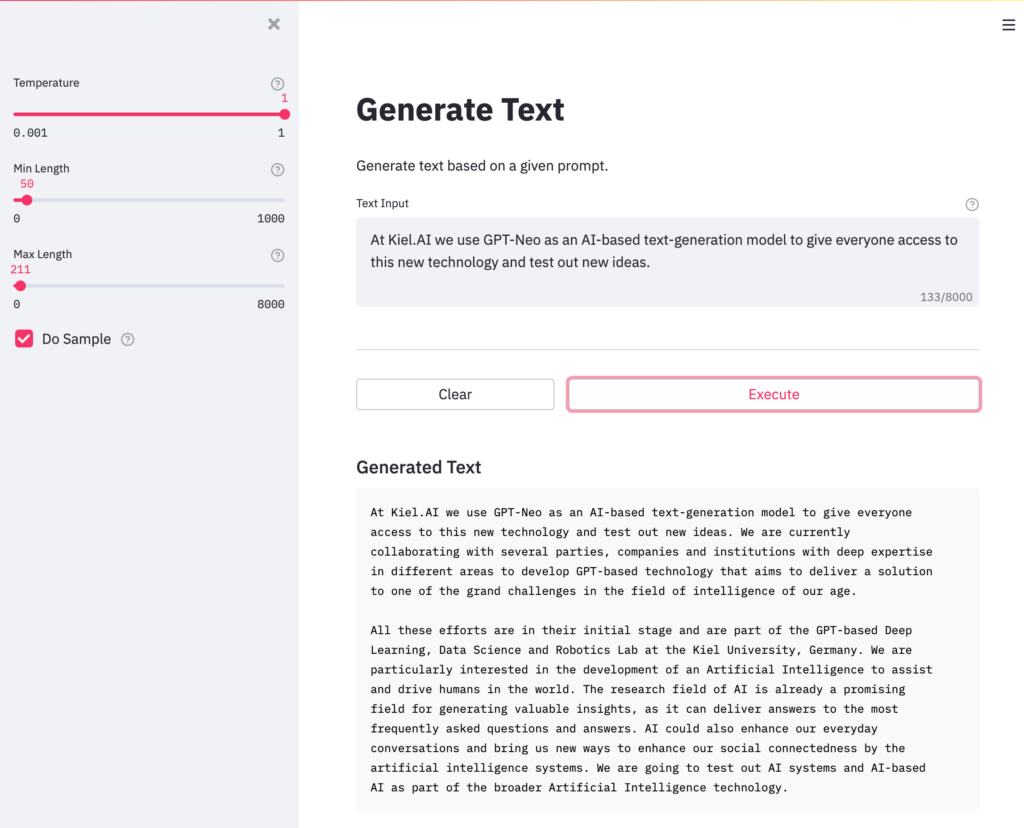

1. GPT-Neo by EleutherAI

GPT-Neo is one of the closest open-source alternatives to GPT models like ChatGPT. While it doesn’t match the capabilities of the latest ChatGPT models, its weights are freely available, so it can run on a local machine with the necessary hardware.

GPT-Neo was released in 125M, 1.3B, and 2.7B parameter sizes. The smallest runs on ordinary hardware, but the larger checkpoints need a capable GPU and several gigabytes of storage.

Key Features:

- Open-source alternative to GPT models.

- Needs substantial computational power for the larger checkpoints, typically a capable GPU, but runs fully offline once downloaded.

- Best for users with technical knowledge who are willing to configure the environment.

Who Should Use It: GPT-Neo is suitable for developers or tech-savvy users who are familiar with machine learning frameworks and have the right hardware setup.

2. GPT-J by EleutherAI

GPT-J (released as GPT-J-6B, with about 6 billion parameters) is another offline-capable language model from EleutherAI that can be used for text generation and processing tasks.

It is larger and generally more capable than GPT-Neo, performing roughly on par with GPT-3 models of similar size, but like its sibling it requires significant computational resources.

Key Features:

- Open-source and capable of running locally on high-end hardware.

- Performs well for text-based tasks like summarization, completion, and translation.

- Offline use is possible with sufficient resources (e.g., a high-memory GPU and plenty of RAM).

Who Should Use It: This model is ideal for data scientists, researchers, or enthusiasts who can handle the technical setup and have the required hardware.
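GPT-J is a base language model rather than a chat assistant, so tasks like translation or summarization are usually expressed as text for the model to continue. As a minimal sketch, the plain-Python helper below builds a few-shot prompt; the function name `build_few_shot_prompt` and the `Input:`/`Output:` labels are illustrative assumptions, not a format GPT-J requires. The resulting string would be passed to whatever local generation code you use.

```python
from typing import Sequence, Tuple


def build_few_shot_prompt(
    task: str,
    examples: Sequence[Tuple[str, str]],
    query: str,
) -> str:
    """Build a few-shot completion prompt for a base (non-chat) language model.

    The "Input:"/"Output:" labels are a hypothetical convention; any consistent
    pattern works, because a base model simply continues the text it is given.
    """
    lines = [task, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")  # blank line separates worked examples
    # End with an open "Output:" so the model's continuation is the answer.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_few_shot_prompt(
        "Translate English to French.",
        [("cheese", "fromage"), ("good morning", "bonjour")],
        "thank you",
    )
    print(prompt)
```

Because the prompt ends with an open `Output:` line, whatever the model generates next is read as the answer; in practice you would also cut the generation off at the next blank line.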

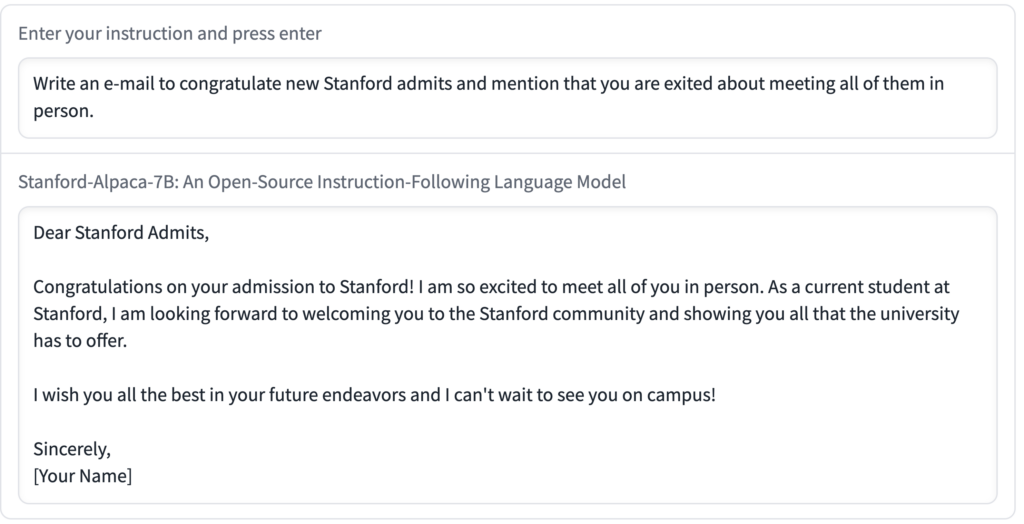

3. Stanford Alpaca

Stanford’s Alpaca model is a more accessible alternative for offline use. It is LLaMA 7B fine-tuned on about 52,000 instruction-following demonstrations, and it can perform many of the same tasks as GPT-3, including language generation and question-answering, albeit at a reduced scale.

With the right configuration, Alpaca can run offline, making it an appealing option for users who want to work without an internet connection.

Key Features:

- At 7 billion parameters, it is larger than GPT-Neo and comparable in size to GPT-J.

- Quantized builds can run on more modest hardware setups.

- Supports text completion, summarization, and other language-based tasks.

Who Should Use It: Alpaca is well suited to users who want a balance between performance and resource demand, as quantized versions can run on lower-end systems while still offering good functionality.
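Because Alpaca was fine-tuned on instructions written in a fixed layout, prompts tend to work best when they follow that layout. The sketch below formats a request that way. The header wording follows the template published in the Stanford Alpaca repository, but treat it as an assumption to check against the repository and against any derived checkpoint you download, since those may expect a different format. The helper name `alpaca_prompt` is hypothetical.

```python
from typing import Optional

# Header wording based on the Stanford Alpaca repository's prompt template
# (assumption: verify against the repository before relying on it).
_HEADER_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request."
)
_HEADER_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request."
)


def alpaca_prompt(instruction: str, context: Optional[str] = None) -> str:
    """Format an instruction, plus optional input text, in the Alpaca layout."""
    if context:
        return (
            f"{_HEADER_WITH_INPUT}\n\n"
            f"### Instruction:\n{instruction}\n\n"
            f"### Input:\n{context}\n\n"
            "### Response:"
        )
    return (
        f"{_HEADER_NO_INPUT}\n\n"
        f"### Instruction:\n{instruction}\n\n"
        "### Response:"
    )


if __name__ == "__main__":
    print(alpaca_prompt("Summarize the text in one sentence.", "Offline models run locally."))
```

The prompt ends at `### Response:` so that the model’s output is the response itself.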

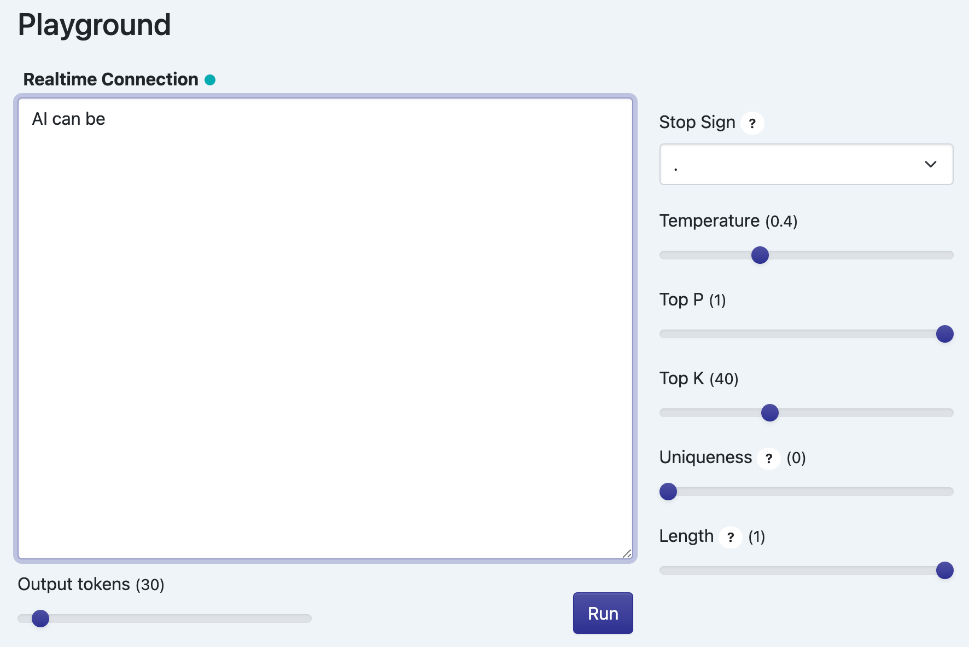

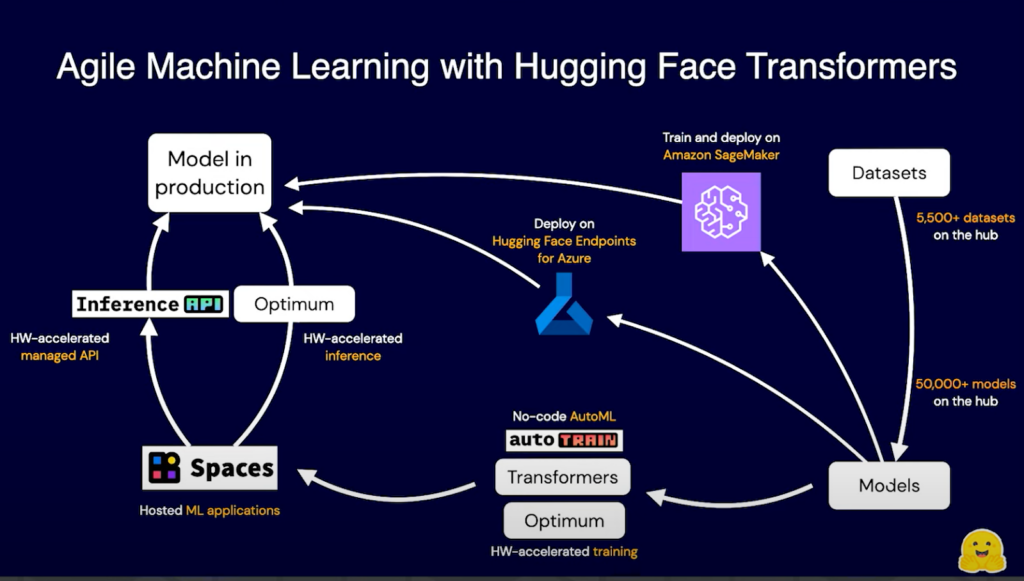

4. Hugging Face Transformers (Pre-Trained Models)

Hugging Face offers a vast library of pre-trained transformer models that can be downloaded and used offline.

These models cover many applications, including text generation, translation, summarization, and more. Once downloaded, users can run these models without needing internet access.

Key Features:

- A wide range of pre-trained models is available.

- It can be used offline once downloaded.

- Supports many NLP tasks such as question-answering, translation, and text generation.

Who Should Use It: Hugging Face models are ideal for developers and researchers looking for an open-source, offline alternative that’s highly customizable.
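A common pattern with Hugging Face models is to download once while connected and then tell the libraries to stay offline. The sketch below assumes the `huggingface_hub` and `transformers` packages are installed. `snapshot_download`, `pipeline`, and the `HF_HUB_OFFLINE` / `TRANSFORMERS_OFFLINE` environment variables belong to those libraries, while the wrapper function names are hypothetical.

```python
import os


def enable_offline_mode() -> None:
    """Ask the Hugging Face libraries not to contact the Hub.

    These variables are read when the libraries are imported, so call this
    before importing transformers or huggingface_hub in the same process.
    """
    os.environ["HF_HUB_OFFLINE"] = "1"
    os.environ["TRANSFORMERS_OFFLINE"] = "1"


def download_model(repo_id: str, local_dir: str) -> str:
    """Run once while online: copy a model repository into a local folder."""
    from huggingface_hub import snapshot_download  # needs huggingface_hub installed

    return snapshot_download(repo_id=repo_id, local_dir=local_dir)


def load_local_generator(local_dir: str):
    """Run offline: build a text-generation pipeline from files on disk."""
    enable_offline_mode()
    from transformers import pipeline  # imported after the offline flags are set

    return pipeline("text-generation", model=local_dir)
```

For example, you might call `download_model("EleutherAI/gpt-neo-1.3B", "models/gpt-neo-1.3B")` while online, then later call `load_local_generator("models/gpt-neo-1.3B")("Offline models are", max_new_tokens=30)` without a connection. The imports sit inside the functions so the offline flags can be set before the libraries read them.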

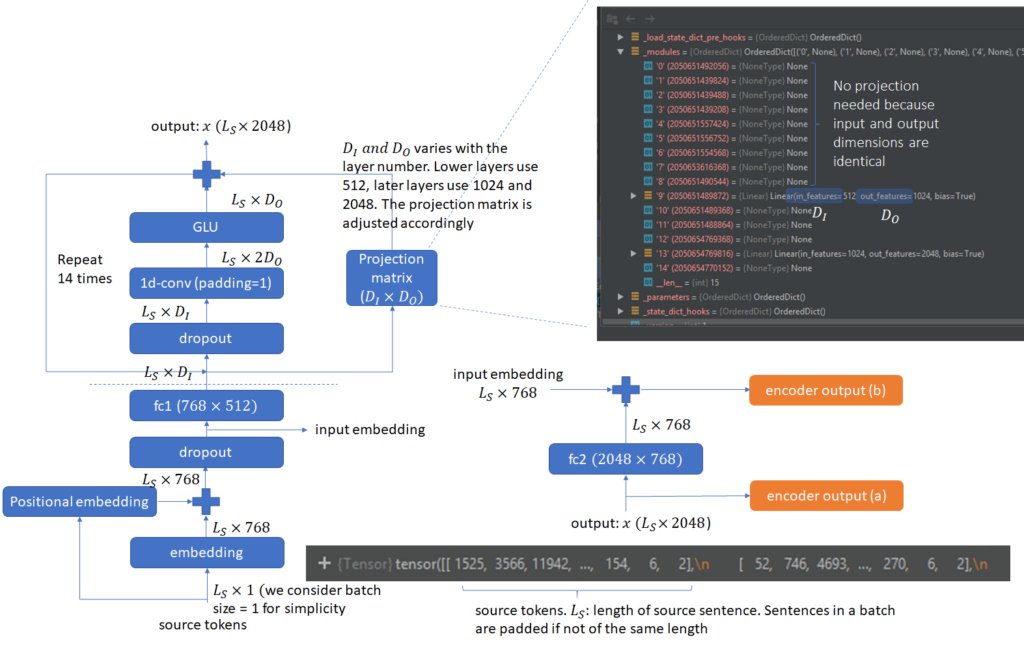

5. Fairseq

Fairseq, developed by Facebook AI Research (now Meta AI), is a sequence modeling toolkit rather than a single model. It can be used to train and run models for translation, text generation, and summarization.

Although it’s mainly used in research environments, Fairseq can be run offline with proper configuration.

Key Features:

- Open-source, allowing for flexibility and customization.

- Capable of running offline for text-based tasks.

- Requires strong technical knowledge for setup and customization.

Who Should Use It: Fairseq is best suited to developers or researchers who need a highly customizable NLP tool that can function offline.

6. LLaMA by Meta

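LLaMA is Meta’s family of large language models. The original release came in sizes from 7 billion to 65 billion parameters, all smaller than GPT-3’s 175 billion, and Meta reported that the 13-billion-parameter model outperforms GPT-3 on most benchmarks. Because the smaller sizes need far less computational power, they are more feasible for offline use.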

LLaMA is designed for researchers and developers who want a compact yet capable model for their language-processing tasks.

Key Features:

- Efficient in terms of resource usage compared to larger models.

- It can be run offline with a reasonably powerful setup.

- Suitable for various NLP tasks like text generation, summarization, and more.

Who Should Use It: LLaMA is ideal for users who need an offline solution but don’t have access to supercomputers. It works well for researchers and developers working on specialized language tasks.

7. Offline Transformers on Local Machines

Users can also run transformer-based models locally using frameworks such as TensorFlow or PyTorch.

Once downloaded, these models no longer require internet connectivity and can be used to perform tasks such as translation, summarization, and text generation offline.

Key Features:

- Wide availability of pre-trained models for offline use.

- It can be run on systems with sufficient GPU resources.

- Offers customization for different tasks, from question-answering to text generation.

Who Should Use It: Transformer models are great for developers who need flexibility and can manage the setup for running these models offline.

Benefits of Using Offline Language Models

Using offline language models like those mentioned above has several benefits:

1. Data Privacy: Prompts and outputs stay on your machine rather than being sent to external servers, which matters for sensitive or confidential tasks.

2. No Internet Dependency: You can use these tools without relying on an internet connection, making them ideal for situations where connectivity is limited or unavailable.

3. Customization: Since most offline models are open-source, users have greater control over how they function and can fine-tune them for specific tasks.

Frequently Asked Questions

Can you use ChatGPT offline?

No, ChatGPT itself does not have an offline version. It requires an internet connection to process inputs and generate responses in real time.

What are some offline alternatives to ChatGPT?

Some offline alternatives include GPT-Neo, GPT-J, Stanford Alpaca, and LLaMA. These models can be downloaded and run on local hardware, although the larger ones require significant computational power.

How much computational power do offline models like GPT-Neo require?

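Requirements grow with model size. The larger GPT-Neo checkpoints and GPT-J typically need a capable GPU, plenty of RAM, and a strong processor. As a rule of thumb, the weights alone take about 2 bytes per parameter in 16-bit precision, so GPT-J’s roughly 6 billion parameters occupy around 12 GB before any memory is used for generation. That puts the larger models out of reach of many consumer-grade machines.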
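A rough lower bound on memory comes from simple arithmetic: the weights need about the parameter count multiplied by the bytes stored per parameter. The sketch below applies that rule. The parameter counts are rounded from the model names, the int8 and int4 figures ignore the small overhead that real quantization schemes add, and activations and the attention cache come on top, so treat the results as lower bounds. The function name `weight_memory_gb` is hypothetical.

```python
# Approximate bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {
    "float32": 4.0,
    "float16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: int, dtype: str = "float16") -> float:
    """Rough memory for the model weights alone, in gigabytes (1 GB = 1e9 bytes).

    Activations, the attention cache, and framework overhead are not included.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    # Parameter counts rounded from the model names (assumption).
    models = {
        "GPT-Neo 2.7B": 2_700_000_000,
        "GPT-J 6B": 6_000_000_000,
        "LLaMA 7B": 7_000_000_000,
    }
    for name, params in models.items():
        row = ", ".join(f"{dt}: {weight_memory_gb(params, dt):.1f} GB" for dt in BYTES_PER_PARAM)
        print(f"{name} -> {row}")
```

By this estimate GPT-J needs about 24 GB in 32-bit precision and 12 GB in 16-bit precision, while a 7-billion-parameter model such as LLaMA 7B or Alpaca drops to roughly 3.5 GB at 4 bits, which is why quantized builds are popular on consumer hardware.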

Is using an offline language model more secure than using an online service?

Generally yes, at least for privacy: because all data processing occurs locally, no information is transmitted to external servers.

Do offline language models have the same functionality as online models like ChatGPT?

Offline models can offer similar functionality for tasks like text generation, translation, and summarization, but they may not be as powerful or updated as frequently as online models like ChatGPT.

While no fully offline version of ChatGPT is available, many open-source alternatives like GPT-Neo, GPT-J, and Hugging Face models offer similar capabilities without internet access.

However, running these models offline requires substantial computational resources and technical knowledge. For those willing to invest in the necessary hardware and setup, these offline options provide a viable solution for secure, internet-free natural language processing.